Saito Tech Update: June 28, 2021

The tech team has been hard at work for the past two sprints. There is a lot to share, but if you don’t want to wade into a technical discussion the short version is that we are on-track and expect to release our Rust Client as scheduled.

Rather than just provide vague-reassurances that things are going well, in this post I’ll try to go a bit deeper to give more insight into how we are operating and what problems we are dealing with and how we are solving them. And with that in mind, the first thing to mention is that under Clay and Stephen’s leadership tech has gone through an organizational change that is professionalizing our dev environment. We have shifted towards test-driven development and a process of having peer-review on major commits to the codebase. This is pretty standard for larger teams: it has involved changes to how development happens that make it harder for any of us to break things or make arbitrary changes without flagging them with other members of the team first.

In terms of coding focus, Saito Rust has been our #1 priority and we’ve gotten a lot done in the past two sprints. Most of the components in the Saito system have now been implemented in Rust code: block production, storage/retrieval of blocks from disk, mempool management of transactions and blocks, tracking of longest-chain state for blocks in the blockchain, our algorithm for adding blocks to the blockchain, burn fee calculations, signature validation, utxoset management and more. Note that Rust has restrictive policies about memory management and “ownership” so these bits of code are not necessarily working in production. We tend to code and then integrate. The major components we have not tackled in any significant way are network and peer management, automatic transaction rebroadcasting and the staking mechanism.

In general, our development effort has tried to have open-ended development cycles followed by a discussion of what works and doesn’t, followed by focused implementation of the consensus approach into core software. Doing things this way has allowed us to find out: (1) what practical issues we run into implementing the logic in Rust, and (2) what suggestions contributors have on improving Saito. Being open to making changes to the way Saito works has slowed us down because it has meant discussions are about more than just implementing an algorithm that already exists in javascript, but it has also led to some pretty clear wins for which Clay and Stephen deserve almost all of the credit:

- an upgrade to the default hashing algorithm from SHA256 to BLAKE3 that will significantly speed up the overall data-throughput of the network; this is really significant — hashing is the single-biggest bottleneck in the classic Saito implementation.

- an optional “pre-validation” step in block-processing that avoids the need for many blocks to even have data inserted into critical blockchain indices until we are sure they are part of a competitive chain; this speeds up work by avoiding the need for it in all but critical cases.

- a change to the way that slips are formatted that eliminates the need for lite-wallets to track the blockchain after they have made a transaction: users will be able to spend their change slips as soon as they have created them; there are follow-on benefits that make it easier to spend ATR transactions here as well.

- various proposals that effectively shrink the size of Saito transactions considerably when they are saved to disk or sent over the network, including a roughly 40% savings in the size of transaction slips which will allow us to pack more transactions into the same space on the blockchain.

Looking back at the last two months, I think we’ve spent more time exploring various implementation ideas that will not be implemented than I would have expected, but believe the payoff has been worth it in the sense that we are implementing improvements we did not conceptualize as part of this effort. The overall result is that the codebase is probably a bit behind where I expected we would be now in terms of raw features, but it is also cleaner and simpler and development is happening faster than expected too. The hardest part has been sorting out a design process where people can communicate effectively about proposed changes.

In terms of actual development milestones, we’re expecting to finish the classic version of the Saito protocol (no-networking) within 1-2 more weeks. And then tackle peer connections, the ATR mechanism, and then the staking mechanism in turn. This should afford time for testing before live-net deployment.

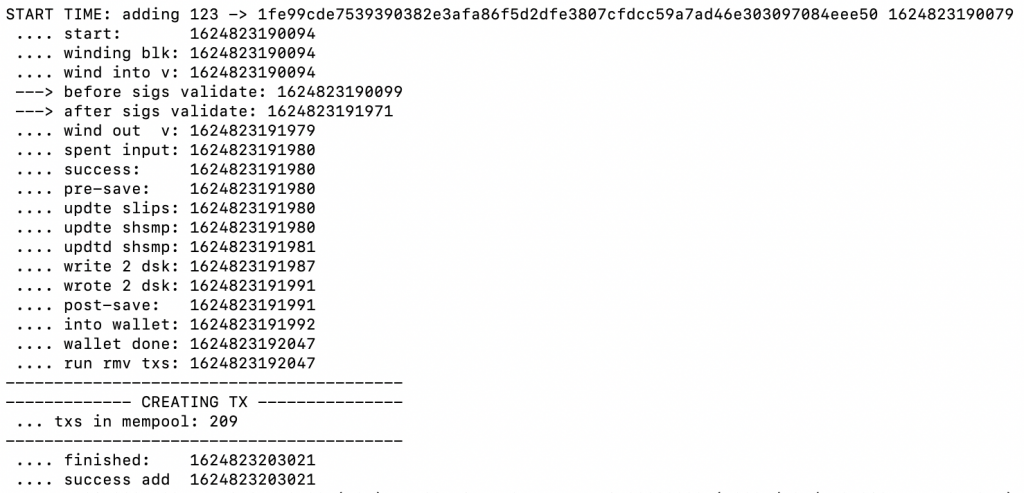

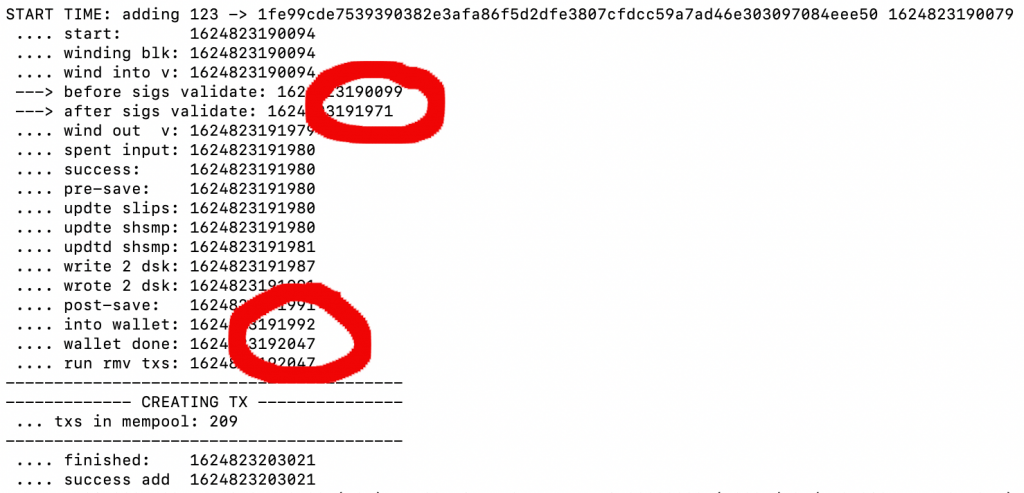

So what are the numbers on raw scalability? A good place to start is probably this screenshot above, generated by an earlier non-Rust version of Saito I fired up to generate some competitive numbers. What you see here is a test script creating an arbitrary number of fairly mammoth transactions and throwing them into an earlier Saito implementation to check how long it takes for the engine to process them. This is a back-of-the-envelope way to sanity-check our performance by seeing how different kinds of blocks (a few big transactions? masses of small ones?) bring the engine to its knees. This specific screenshot shows a smaller number of data-heavy transactions taking about 2 seconds to process. This is what you should see:

There are three critical bottlenecks that affect our non-Rust implementation: (1) saving blocks to disk, (2) loading blocks from disk, and (3) validating blocks (and transactions). We can’t see the delays in blocks getting saved here because that is happening asynchronously and behind-the-scenes. But we can see that hashing transaction-affixed data and checking those transaction signatures is taking a huge amount of processing time. There are some less obvious lessons too — it is interesting to know that our Google Dense Hashmap is not even breaking a sweat in handling UTXO updates but that our wallet is already starting to struggle, suggesting that core routing nodes shouldn’t even do that work by default.

One of the dirty secrets in the blockchain space is that network speed is the most critical bottleneck for scale. This is one of the reasons the world actually needs Saito, since “routing work” pays for fast network connections and that is ultimately the only way to achieve scale. But the more time we can shave off other work the more time we can afford to waste on network latency, so our focus in terms of core blockchain performance has been on those three critical bottlenecks: (1) saving blocks to disk, (2) loading blocks from disk, and (3) validating blocks (and their transactions).

Without solving any of those issues, what the overall numbers from non-Rust implementations tell us is that any javascript client is going to hit a wall somewhere around 400 MB per minute, and probably sooner if they start getting spammed by malicious nodes. This is useful to know because terabyte-scale requires around 1.5GB per minute so Rust needs to deliver big gains. It is also useful to have raw numbers because while some people think javascript isn’t a performant language this isn’t true — what you see here is *optimized* code that does things like swap-in precompiled C binaries in performance-critical areas like handling the UTXO set.

So how does Rust look right now? In javascript we use JSON and store a lot of data in formats like “human-readable strings” that are slower to read and write from disk. In Rust we are eliminating those and focusing on pure byte-streams to speed-up performance. Most importantly, we are dividing the work of “validating” transactions across the number of CPU cores that a computer has \available. Rust is almost uniquely better suited to parallelization here for some complicated reasons.

And the Rust numbers are much better. In terms of baseline numbers, we are seeing evidence to suggest 2x speed improvements on writing-to-disk and loading-from-disk. The savings here don’t come from the speed of interacting with the hard drive but rather the conversion between the resulting byte-streams and the in-memory objects that can be processed by the software. Saving to disk is not a really critical bottleneck, but loading from disk (or the network) is and so performance gains here are really critical to being able to rapidly validate and forward blocks. Clay in particular has been doing a lot of heavy lifting in pushing us towards better and faster data serialization and deserialization. It’s hard to share hard numbers because serialization work is still underway. We think that 50% improvement in block loading speed is possible.

What about transaction validation? In testing this aspect of system performance, we’ve mostly been dealing with test blocks in the 10 MB to 1 GB range with anywhere from 1000 to 100,000 transactions in total (~300k slips). We don’t really know how much data users will associate with transactions so the differences here are less about approximating reality and more about finding out — given the need to process a metric ton of transactions — how long block validation takes at different transaction sizes. Doing this has helped us learn – for instance – that for most practical transaction sizes a lot more time is spent hashing than verifying the resulting transaction signature. And UTXO validation? That continues to be a cakewalk. Possibly because we are using best-of-breed tools instead of the sort of databases that POS networks drift towards we won’t need to optimize the speed of updating our UTXO hashmap for a long-time.

And the good news here is that Rust is delivering 100% on parallelization. While we can use various techniques to add “parallel processing” to transaction validation in other languages, many require creating multiple “instances” of blocks for separate CPU threads to process individually. Rust is superior for its avoidance of this issue, meaning that once our block is in memory we can throw as many CPUs at transaction validation as needed. For all practical purposes, this makes transaction validation a non-issue. The process still takes a considerable amount of time compared to everything else we are doing, but the performance limit here is the number of CPU threads available, and that is scalable to something like 64-cores TODAY on commercial hardware.

We’re excited to get to the point where benchmarking is becoming realistic and useful. We don’t really want to share numbers broadly on everything quite yet because we haven’t finalized serialization and ATR and routing work obviously have an effect on the performance, but if you are really curious you can always download and run the code and see for yourself. The most important thing for us is that we’ve got a solid foundation for our own team in submitted patches and proposing design changes, and that decisions are being made in terms of actual numbers. Does this make things faster? So we think things are pretty solid.

Our biggest goal for the next 2 weeks is to finish what we consider the classic implementation of Saito. This involves finalizing what versions of algorithms are included by default in the “basic” version and particularly how the mining components interact with block production. After that we will move on with more advanced features (automatic transaction rebroadcasting, staking).

There is lots more to do, but also lots of progress. As always, if you’re interested in checking out what is happening for yourself or joining us and tackling some development, please feel welcome to track or clone our Saito Rust repository on Github. We have a dev channel in Saito Discord where questions and discussions are more than welcome too. Hope to see you there.